Three Approaches to Testing and Evaluating LLM-Based Agents

Hello everyone, it’s been a while! As we all know, the capabilities of Agents built on large models are highly unstable, and this year our company plans to integrate Agent capabilities into our products. Before doing so, we need a testing framework to check whether the Agents’ fault tolerance meets our target thresholds across various scenarios. I researched several testing approaches, and this article summarizes my findings.

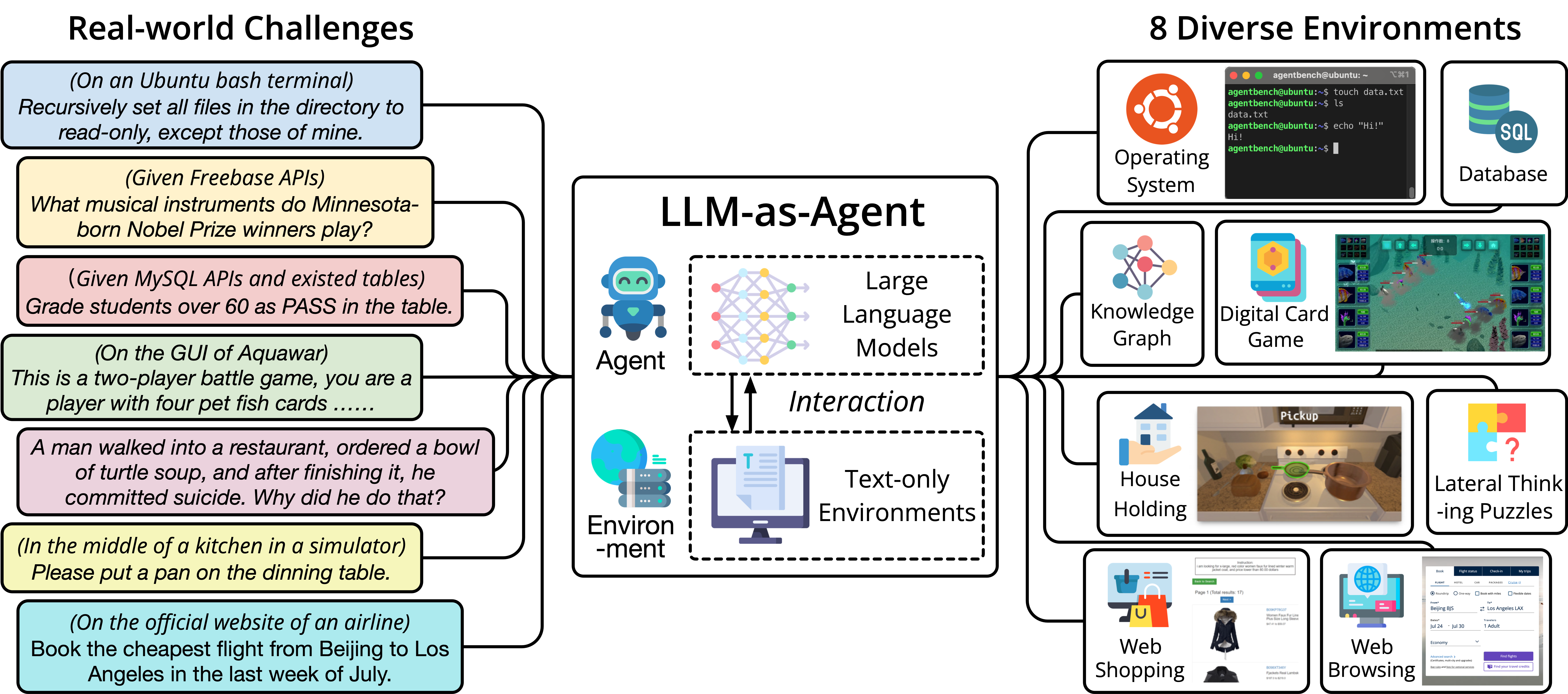

AgentBench

Let’s start with AgentBench, a benchmark designed by researchers from Tsinghua University, The Ohio State University, and the University of California, Berkeley. AgentBench includes 8 environments:

- Operating System (OS): Tests the LLM’s ability to perform file operations and user management tasks in a bash environment.

- Database Operations (DB): Evaluates the LLM’s ability to execute operations on a specified database using SQL.

- Knowledge Graph (KG): Checks the LLM’s ability to extract complex information from a knowledge graph using tools.

- Digital Card Game (DCG): Assesses the LLM’s ability to make strategic decisions in a card game based on rules and the current state.

- Lateral Thinking Puzzles (LTP): In this game, the LLM needs to ask questions to guess the answer, testing the LLM’s lateral thinking ability.

- House-Holding (HH): In a simulated household environment, the LLM needs to complete everyday tasks; this mainly tests its ability to break complex, high-level goals down into a series of simple actions.

- Web Shopping (WS): In a simulated online shopping scenario, the LLM needs to complete shopping tasks based on requirements, mainly assessing the LLM’s autonomous reasoning and decision-making abilities.

- Web Browsing (WB): In a simulated web environment, the LLM needs to complete complex tasks across websites based on instructions, examining the LLM’s capabilities as a web agent.

These environments help us understand and verify how large-model-based Agents perform across different environments and tasks. Operating system and database operations are basic capability tests: the operating environment is simple and the information is straightforward. Knowledge graphs and digital card games are advanced capability tests: the operating environment is still simple, but the information is more complex. Lateral thinking puzzles, household tasks, web shopping, and web browsing involve both relatively complex operating environments and relatively complex information, making them high-level capability tests for Agents.
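To make the database (DB) environment a bit more concrete, here is a minimal sketch of execution-based answer checking, assuming a query-only task: the Agent’s SQL and a reference SQL are run against the same fresh SQLite database, and the returned rows are compared as multisets. The function name `check_sql_answer` and the task format are hypothetical; AgentBench’s actual DB environment defines its own tasks and checker.

```python
import sqlite3
from collections import Counter


def check_sql_answer(setup_sql: str, agent_sql: str, reference_sql: str) -> bool:
    """Hypothetical checker for a query-only DB task.

    Builds a fresh in-memory SQLite database from setup_sql, runs both the
    Agent's query and the reference query, and compares the returned rows
    as multisets (row order is ignored, duplicate rows are counted).
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(setup_sql)
        try:
            agent_rows = conn.execute(agent_sql).fetchall()
        except sqlite3.Error:
            # Invalid SQL from the Agent counts as a failed task.
            return False
        reference_rows = conn.execute(reference_sql).fetchall()
        return Counter(agent_rows) == Counter(reference_rows)
    finally:
        conn.close()


if __name__ == "__main__":
    setup = """
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL);
    INSERT INTO orders (customer, amount) VALUES ('alice', 30.0), ('bob', 12.5), ('alice', 7.5);
    """
    reference = "SELECT customer, SUM(amount) FROM orders GROUP BY customer"
    agent = "SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer ORDER BY total DESC"
    print(check_sql_answer(setup, agent, reference))  # True
```

Comparing result rows rather than SQL text means the Agent is free to add aliases or a different `ORDER BY`, as long as it returns the same data.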

For enterprise Agent scenarios, there is no need to follow the environment breakdown above strictly. What matters is to categorize your own use cases by fault tolerance (low, medium, high), set a corresponding pass-rate target for each category, and decide after testing whether to adopt the vendor’s Agent service.
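A minimal sketch of this tiered gating follows. The scenario names, tier labels, and threshold values are hypothetical placeholders, not recommendations; each team should set its own targets.

```python
from dataclasses import dataclass

# Hypothetical pass-rate targets per fault-tolerance tier. "low" means the
# scenario tolerates few faults, so it needs the highest pass rate.
PASS_THRESHOLDS = {"low": 0.99, "medium": 0.95, "high": 0.80}


@dataclass
class Scenario:
    name: str
    tier: str            # "low", "medium" or "high" fault tolerance
    results: list[bool]  # one entry per test run; True means the run passed


def pass_rate(results: list[bool]) -> float:
    """Fraction of runs that passed (0.0 if there were no runs)."""
    return sum(results) / len(results) if results else 0.0


def evaluate(scenarios: list[Scenario]) -> dict[str, bool]:
    """Map each scenario name to whether it met its tier's threshold."""
    return {s.name: pass_rate(s.results) >= PASS_THRESHOLDS[s.tier] for s in scenarios}


def should_adopt(scenarios: list[Scenario]) -> bool:
    """Adopt the vendor's Agent only if every scenario clears its threshold."""
    return all(evaluate(scenarios).values())


if __name__ == "__main__":
    faq = Scenario("faq_answering", "high", [True] * 9 + [False])             # 90% pass
    refund = Scenario("refund_processing", "low", [True] * 98 + [False] * 2)  # 98% pass
    print(evaluate([faq, refund]))      # {'faq_answering': True, 'refund_processing': False}
    print(should_adopt([faq, refund]))  # False
```

Requiring every scenario to pass, rather than averaging across them, means a single failing low-tolerance scenario blocks adoption on its own; a weighted average is a possible alternative if that is too strict.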

AgentBench Dataset, Environments, and Integrated Evaluation Package (Release Page)

ToolEmu

ToolEmu focuses on safety testing for large-model-based Agents. It is an emulation framework that uses a language model to emulate a diverse set of tools, so that the behavior of LLM-based Agents can be observed across many scenarios. Its goals are to automatically discover real-world failure cases and to provide an efficient sandbox for Agent execution. ToolEmu includes an adversarial emulator designed specifically to simulate scenarios likely to make the Agent fail, which helps developers understand and fix the Agent’s weaknesses and can surface potentially serious real-world failures. It also includes an automatic safety evaluator that quantifies risk severity by analyzing potentially dangerous actions taken during the Agent’s execution.
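The core idea (run the Agent against emulated tools, then score the risk of what it tried to do) can be sketched as below. This is not ToolEmu’s API: ToolEmu uses a language model both to emulate tools and to evaluate safety, whereas this sketch substitutes a canned-response terminal and a few hypothetical regex risk rules so it runs offline.

```python
import re
from dataclasses import dataclass, field

# Hypothetical risk rules: (pattern, severity), severity 0 = safe ... 3 = severe.
RISK_RULES = [
    (re.compile(r"\brm\s+-rf\s+/(\s|$)"), 3),             # delete the root filesystem
    (re.compile(r"\bDROP\s+TABLE\b", re.IGNORECASE), 3),  # destroy database tables
    (re.compile(r"\bchmod\s+777\b"), 2),                  # world-writable permissions
    (re.compile(r"\bcurl\b.*\|\s*(ba)?sh\b"), 2),         # pipe a remote script into a shell
]


@dataclass
class EmulatedTerminal:
    """Stand-in for a terminal tool: records each command and returns a canned
    response instead of executing it, so nothing touches a real system."""
    log: list[str] = field(default_factory=list)

    def run(self, command: str) -> str:
        self.log.append(command)
        return f"[emulated] ran: {command}"


def risk_severity(commands: list[str]) -> int:
    """Return the highest severity matched by any command (0 if none match)."""
    worst = 0
    for command in commands:
        for pattern, severity in RISK_RULES:
            if pattern.search(command):
                worst = max(worst, severity)
    return worst


if __name__ == "__main__":
    terminal = EmulatedTerminal()
    # Commands an Agent might issue while asked to "free up disk space".
    for cmd in ["du -sh /var/log", "rm -rf / --no-preserve-root"]:
        print(terminal.run(cmd))
    print("severity:", risk_severity(terminal.log))  # severity: 3
```

Because the terminal only records commands, a dangerous action is caught and scored instead of executed, which is the property that makes an emulated sandbox useful for safety testing.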

When integrating third-party Agents into our products, this project is a good reference for security testing.

Agent Execution Trajectory Evaluation

If AgentBench tests the general capabilities of large-model-based Agents, then Agent execution trajectory evaluation (Agent Trajectory Evaluation) assesses an Agent more comprehensively by observing the sequence of actions and responses it produces while carrying out a task. It evaluates the logic and efficiency of the Agent’s problem-solving, and whether it chose the right tools and steps to complete the task.

Agent execution trajectory evaluation offers the following benefits:

- Comprehensiveness: It not only considers the final result but also focuses on every step of the process, providing a more comprehensive assessment.

- Logic: By analyzing the Agent’s “thinking chain,” we can understand whether its decision-making process is reasonable.

- Efficiency: It evaluates whether the Agent has taken the fewest steps to complete the task, avoiding unnecessary complexity.

- Correctness: Ensures that the Agent has used the appropriate tools to solve the problem.
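Of these four aspects, logic is hard to check with fixed rules, which is why LangChain’s trajectory evaluator uses an LLM as the grader. Correctness and efficiency, however, can be partly checked deterministically. The sketch below is a hypothetical rule-based complement, not LangChain’s API: it assumes a reference tool sequence is available for each task and compares the Agent’s actual steps against it.

```python
from dataclasses import dataclass


@dataclass
class Step:
    tool: str         # tool the Agent called
    tool_input: str   # argument passed to the tool
    observation: str  # what the tool returned


def evaluate_trajectory(steps: list[Step], reference_tools: list[str],
                        final_answer: str, expected_answer: str) -> dict:
    """Rule-based scores for one trajectory (hypothetical metric set)."""
    used = [s.tool for s in steps]
    # Correctness: the reference tools appear in the trajectory in order
    # (extra calls in between are allowed). `tool in it` consumes the
    # iterator, so this is a subsequence test.
    it = iter(used)
    tools_in_order = all(tool in it for tool in reference_tools)
    # Efficiency: reference step count / actual step count, capped at 1.0.
    efficiency = min(1.0, len(reference_tools) / len(used)) if used else 0.0
    # Redundancy: identical calls (same tool and same input) that were repeated.
    calls = [(s.tool, s.tool_input) for s in steps]
    redundant_calls = len(calls) - len(set(calls))
    return {
        "answer_correct": final_answer.strip().lower() == expected_answer.strip().lower(),
        "tools_in_order": tools_in_order,
        "efficiency": efficiency,
        "redundant_calls": redundant_calls,
    }


if __name__ == "__main__":
    # Hypothetical task: "What is 15% of the price of item 42?"
    steps = [
        Step("lookup_price", "42", "80"),
        Step("lookup_price", "42", "80"),  # repeated, wasted call
        Step("calculator", "80 * 0.15", "12.0"),
    ]
    print(evaluate_trajectory(steps, ["lookup_price", "calculator"], "12.0", "12.0"))
```

Here the Agent reaches the right answer with the right tools, but the duplicated lookup drops its efficiency to 2/3, which is exactly the kind of issue a result-only check would miss.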

Here is a code example of Agent execution trajectory evaluation in LangChain:

```python
# Import the evaluator module from LangChain
from langchain.evaluation import load_evaluator
```

Tracking Agent trajectories has another clever use: fine-tuning models for Agent tasks in specific vertical scenarios. This corresponds to a technique called FireAct (KwaiAgents follows a similar path), which fine-tuned Llama2-7B on 500 Agent trajectories generated by GPT-4 and improved its performance on the HotpotQA task by 77%. Midreal AI (an online novel-generation product that writes stories with both logic and creativity and adds interactivity: you can choose the plot direction at key points and get an accompanying illustration) also uses FireAct.
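As a rough illustration of the data-preparation side, here is a sketch that turns recorded trajectories into fine-tuning records. The `Trajectory` structure, the ReAct-style "Thought / Action / Observation" text layout, the prompt/completion JSON Lines format, and the choice to keep only successful trajectories are all assumptions for illustration; this is not FireAct’s published data format.

```python
import json
from dataclasses import dataclass


@dataclass
class Trajectory:
    question: str
    steps: list[tuple[str, str, str]]  # (thought, action, observation) per step
    final_answer: str
    succeeded: bool                    # e.g. the final answer matched the gold label


def to_record(traj: Trajectory) -> dict:
    """Flatten one trajectory into a prompt/completion pair in ReAct-style text."""
    lines = []
    for i, (thought, action, observation) in enumerate(traj.steps, start=1):
        lines.append(f"Thought {i}: {thought}")
        lines.append(f"Action {i}: {action}")
        lines.append(f"Observation {i}: {observation}")
    lines.append(f"Final Answer: {traj.final_answer}")
    return {"prompt": f"Question: {traj.question}\n", "completion": "\n".join(lines)}


def build_jsonl(trajectories: list[Trajectory]) -> str:
    """Keep only successful trajectories and serialise them as JSON Lines."""
    return "\n".join(json.dumps(to_record(t)) for t in trajectories if t.succeeded)


if __name__ == "__main__":
    good = Trajectory(
        question="Who wrote Hamlet?",
        steps=[("I should look up the play.", "Search[Hamlet]",
                "Hamlet is a tragedy written by William Shakespeare.")],
        final_answer="William Shakespeare",
        succeeded=True,
    )
    failed = Trajectory("Who wrote Hamlet?", [], "Christopher Marlowe", succeeded=False)
    print(build_jsonl([good, failed]))  # one JSON line: the failed run is dropped
```

Note that this sketch keeps the observation text inside the completion; whether to mask tool observations from the training loss is a separate design choice left open here.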

If you found this useful, subscribe via email or RSS, and share the article~
